A friend introduced me to the game 24, a simple game you can play with a deck of playing cards. Over the course of playing the game, I realized that either my friend was really good at this game, or that I wasn't!

Given how simple the game was, I figured that I could write a program that was good at it. Scoping the problem out, it was a perfect weekend hack project, and a good break from all the grant writing, research and administrivia of my job. Also, I have always been fascinated by all the cool things my colleagues in Computer Vision do, so it was a good way to learn about some of the fundamentals.

The Game

Here's a description of the game, from the Wikipedia article:

The 24 Game is an arithmetical card game in which the object is to find a way to manipulate four integers so that the end result is 24. Addition, subtraction, multiplication, or division, and sometimes other operations, may be used to make four digits from one to nine equal 24. For example, card with the numbers 4,7,8,8, a possible solution is the following: (7-(8/8))*4=24.

The game is fun to play 1-on-1, where the first person to come up with a valid expression gets to keep the cards. Once the deck is exhausted, the person with the most cards is declared the winner.

An Automatic Solution

My vision for an automated version of the game was simple. Players sit across a table on which the cards are laid out. My program would take a picture of the cards and recognize them. It would then generate a valid expression that yields 24, and project the answer onto the table. Before going into the details, here's a video of how the system would work:

Parts of the Problem

Let's think through the system and break it down into parts. The heart of the problem is to search for a valid expression that yields 24. To get the digits, we have to look at the table using a camera and pick out the cards. Then, we need to process each card and recognize which digit it shows. Finally, once we have the expression, we project it onto the table surface using a projector.

Searching for Expressions

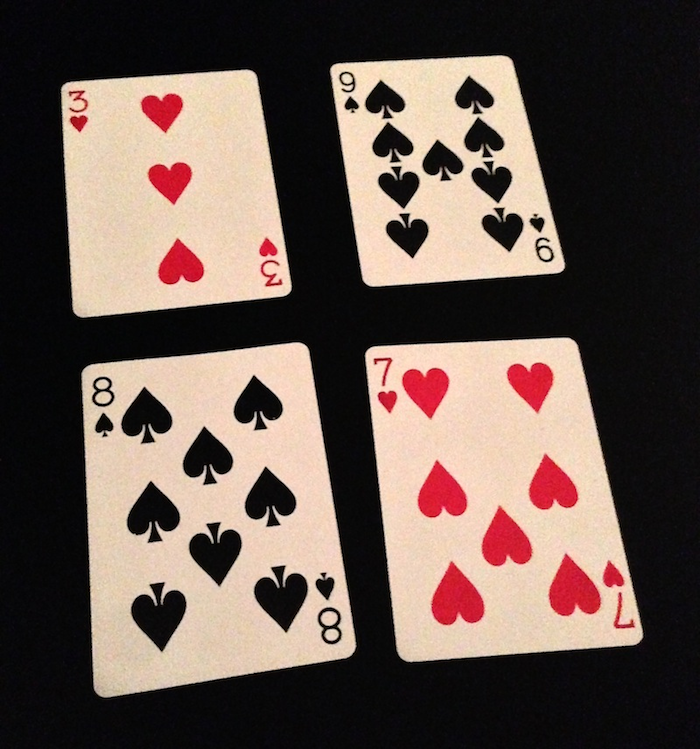

Before getting into the computer vision and image processing bits, let's get the math out of the way. Consider the following set of cards. The digits here are: 3,7,8,9.

For these 4 digits, the search space (i.e. the set of all the possible candidates) can be enumerated as:

- All the permutations of the digits (e.g. [3,7,8,9], [9,8,7,3] and so on)

- All possible operator insertions for each permutation (e.g. [3 + 7 + 8 + 9], [3 * 7 - 8 + 9])

- All possible parenthesizations of each operator configuration (e.g. 3 * [ 7 - 8 + 9 ] )

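As it turns out, the number of possible candidates is bounded and hence tractable to brute force: there are 4! = 24 orderings of the digits, 4^3 = 64 ways to fill the three operator slots, and 5 ways to parenthesize four operands (for the curious, see Catalan Numbers), for at most 24 x 64 x 5 = 7,680 candidates. A couple of optimizations reduce the search space and speed up the computation. One is memoization: [3 * (7 + 8 + 9)] and [3 / (7 + 8 + 9)] share the subexpression (7 + 8 + 9), which can be computed once and cached. Another is exploiting the properties of the operators: + and * are commutative and associative, so (3 + 7) + 8 and 3 + (7 + 8) need not be evaluated separately. This does not extend to - and /, though: in 3 * [ 7 - 8 + 9 ], grouping the inner part as (7 - 8) + 9 gives 8, while 7 - (8 + 9) gives -10.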

Note that not every set of four digits has a valid 24 expression (1,1,1,1 is one example), so there is a chance we'll have to say "Expression impossible".
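To make the search concrete, here is a minimal brute-force sketch of the enumeration described above: every permutation, every operator choice, and each of the five parenthesizations. The function name `solve24` and the use of `Fraction` for exact division are my own choices for illustration, not necessarily how the original solver works, and it skips the memoization and associativity optimizations entirely.

```python
from fractions import Fraction
from itertools import permutations, product
import operator

OPS = {'+': operator.add, '-': operator.sub,
       '*': operator.mul, '/': operator.truediv}

def apply(op, a, b):
    """Apply op to a and b; None marks an invalid result (division by zero)."""
    if a is None or b is None:
        return None
    if op == '/' and b == 0:
        return None
    return OPS[op](a, b)

def solve24(digits, target=24):
    """Return one expression string that evaluates to target, or None."""
    for a, b, c, d in permutations([Fraction(x) for x in digits]):
        for p, q, r in product(OPS, repeat=3):
            # The five ways to parenthesize four operands
            candidates = [
                (apply(r, apply(q, apply(p, a, b), c), d), "(({a}{p}{b}){q}{c}){r}{d}"),
                (apply(r, apply(p, a, apply(q, b, c)), d), "({a}{p}({b}{q}{c})){r}{d}"),
                (apply(q, apply(p, a, b), apply(r, c, d)), "({a}{p}{b}){q}({c}{r}{d})"),
                (apply(p, a, apply(r, apply(q, b, c), d)), "{a}{p}(({b}{q}{c}){r}{d})"),
                (apply(p, a, apply(q, b, apply(r, c, d))), "{a}{p}({b}{q}({c}{r}{d}))"),
            ]
            for value, template in candidates:
                if value == target:
                    return template.format(a=a, b=b, c=c, d=d, p=p, q=q, r=r)
    return None  # "Expression impossible"

print(solve24([3, 7, 8, 9]))
print(solve24([1, 1, 1, 1]))  # None: no expression reaches 24
```

Exact fractions matter for hands like 1,3,4,6, where the intermediate result 3/4 would otherwise be at the mercy of floating-point rounding.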

Identifying and Registering Cards

Now that we have the solution, let's turn to the fun part -- seeing the cards! As we can see in the image above, we have a dark background for a tabletop, and the cards are laid out in a 2x2 grid. They are however not quite vertically aligned, and the picture itself is taken at an angle. Thus, we first need to perform image segmentation to pick out each card by itself, and then image registration to line up the picture of each card with a flat, rectangular representation of a card.

The Python API for OpenCV is very easy to use for these tasks, and there are plenty of informative blog posts that help. We first read the picture and preprocess it (greyscale, blur and threshold) to get rid of artifacts so that we can focus on the real stuff:

    import cv2

    im = cv2.imread(filename)
    gray = cv2.cvtColor(im, cv2.COLOR_BGR2GRAY)
    # Note: a (1,1) kernel leaves the image essentially unblurred; use a larger odd size such as (5,5) for real smoothing
    blur = cv2.GaussianBlur(gray, (1, 1), 1000)
    flag, thresh = cv2.threshold(blur, 120, 255, cv2.THRESH_BINARY)

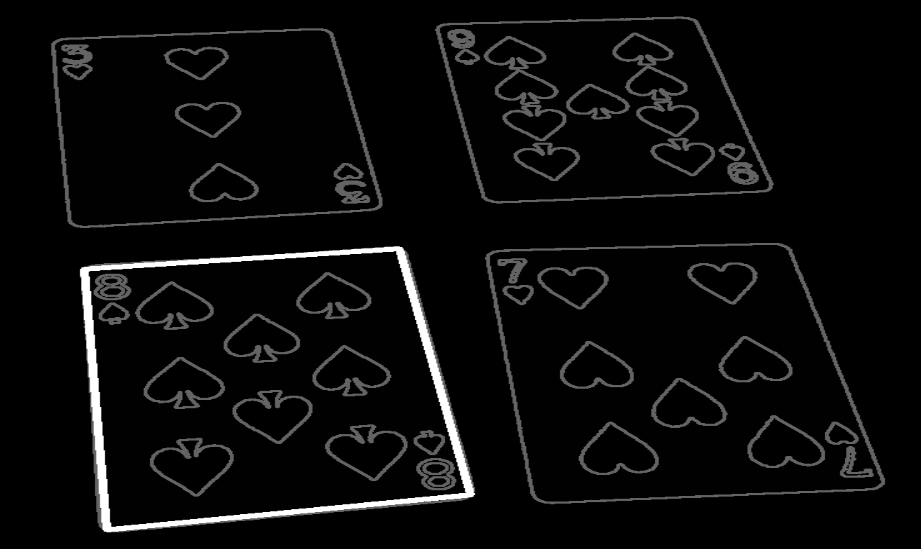

We then find all contours in the image. These can be edges of the cards themselves, or contours of the figures and letters in the cards. Thus, we look for the four contours that span the most area -- which have to be the four cards themselves.

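    # OpenCV 2.x and 4.x return (contours, hierarchy); OpenCV 3.x returns (image, contours, hierarchy)
    contours, hierarchy = cv2.findContours(thresh, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)
    # Keep only the numcards largest contours by area
    contours = sorted(contours, key=cv2.contourArea, reverse=True)[:numcards]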

This takes the original image and identifies the top 4 contours (aka the four cards), which we can then cycle through one by one. Note the grey lines in the following image -- they are the contours identified, which include the symbols on the cards themselves.
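If you want to see why sorting by area separates the cards from the symbols printed on them, here is a small OpenCV-free sketch. It computes polygon area with the shoelace formula, which for a simple polygon should match what `cv2.contourArea` reports, and keeps the largest outlines. The helper names and the toy coordinates are made up for illustration.

```python
def polygon_area(points):
    """Absolute area of a closed polygon given as [(x, y), ...] (shoelace formula)."""
    total = 0.0
    n = len(points)
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]  # wrap around to close the polygon
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0

def largest_contours(contours, count):
    """Return the `count` contours with the largest area, biggest first."""
    return sorted(contours, key=polygon_area, reverse=True)[:count]

# Toy outlines: one card and two small shapes printed on it
card = [(0, 0), (100, 0), (100, 150), (0, 150)]
pip = [(10, 10), (20, 10), (20, 20), (10, 20)]
letter = [(30, 30), (35, 30), (35, 40)]

print(largest_contours([pip, card, letter], 1) == [card])
```

The card outline dwarfs anything drawn inside it, which is why taking the top four by area works as long as the cards do not overlap and nothing else large is on the table.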

Now we need to register each card into a rectangular representation. To do this, we first find the outline of each card. This is done by approximating a polygon from the contour (which is simply a vector of points) and then finding the minimum-area bounding box, which may be rotated:

    for i in range(numcards):
        card = contours[i]
        peri = cv2.arcLength(card, True)
        # Approximate the contour with a polygon; a card should reduce to four corners
        approx = cv2.approxPolyDP(card, 0.02 * peri, True)
        # Minimum-area (possibly rotated) bounding rectangle of this card
        rect = cv2.minAreaRect(card)
        r = cv2.boxPoints(rect)  # cv2.cv.BoxPoints(rect) on OpenCV 2.x

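Then, for each card, we apply a perspective transform that maps its four corners onto a square, so that the card can be easily recognized:

    import numpy as np

    # Target corners of the flattened 450x450 card: top-left, top-right, bottom-right, bottom-left
    h = np.array([[0, 0], [449, 0], [449, 449], [0, 449]], np.float32)
    # approx must hold the card's four corners as float32, in the same order as h
    transform = cv2.getPerspectiveTransform(approx, h)
    warp = cv2.warpPerspective(im, transform, (450, 450))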
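The warp only works if the four detected corners arrive in the same order as the target corners in `h`. Here is one common way to get that ordering, sketched in plain Python. The helper name `order_corners` is hypothetical, and the sum/difference trick assumes the card is not rotated by anything close to 45 degrees. With OpenCV you would first flatten the polygon, e.g. `approx.reshape(4, 2)`, and convert the ordered result to a `float32` array.

```python
def order_corners(points):
    """Return four (x, y) corners as top-left, top-right, bottom-right, bottom-left.

    Uses image coordinates, where y grows downwards.
    """
    pts = [tuple(p) for p in points]
    top_left = min(pts, key=lambda p: p[0] + p[1])      # smallest x + y
    bottom_right = max(pts, key=lambda p: p[0] + p[1])  # largest x + y
    top_right = max(pts, key=lambda p: p[0] - p[1])     # large x, small y
    bottom_left = min(pts, key=lambda p: p[0] - p[1])   # small x, large y
    return [top_left, top_right, bottom_right, bottom_left]

# Corners of a slightly skewed card, in arbitrary order
corners = [(310, 420), (40, 60), (60, 400), (300, 50)]
print(order_corners(corners))
```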

Recognizing Cards

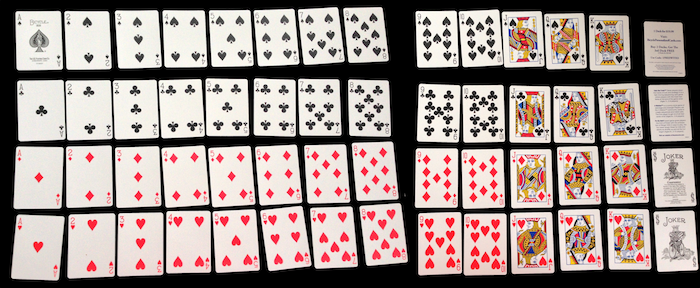

Now that we have a registered representation of the card, the next step is to recognize it. For this, we use a picture of a training deck to tell our code what each card looks like in the first place.

We pipe this image through the same code as above, creating registered representations of each card, and then labeling each card by hand. In my code, I created a simple tab-separated-values file that stored the card information in order of how the registered cards were listed by the code:

55 * *

54 8 S

53 K S

52 Q S

Note that "*" is used to represent Joker / other cards, which were also in the deck.
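Reading such a label file back in is straightforward. The sketch below assumes the three columns are card index, rank and suit, which is my reading of the sample lines above. The function name `parse_labels` is made up for illustration.

```python
import csv
import io

def parse_labels(text):
    """Map card index -> (rank, suit) from tab-separated lines like '54<TAB>8<TAB>S'."""
    labels = {}
    for row in csv.reader(io.StringIO(text), delimiter="\t"):
        if len(row) != 3:
            continue  # skip blank or malformed lines
        index, rank, suit = row
        labels[int(index)] = (rank, suit)
    return labels

sample = "55\t*\t*\n54\t8\tS\n53\tK\tS\n52\tQ\tS\n"
labels = parse_labels(sample)
print(labels[54])
```

For a real file you would pass the file's contents, for example `parse_labels(open(path).read())`, where `path` is wherever you saved the labels.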

Now that we know what each card looks like, the next step is to match it up with the incoming candidate card. Both are lined up / registered, and both have been through the same preprocessing. There are many robust approaches to this problem: we could match local features using algorithms such as SIFT and SURF, recognize each digit / letter using character recognition, or even recognize the suit symbols on each card and count them to get the digit.

The simplest option here, however, is to simply ask -- how different is the picture of the incoming card from each of the training cards? We can simply write this as:

    def preprocess(img):
        gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
        blur = cv2.GaussianBlur(gray, (5, 5), 2)
        # Gaussian-weighted adaptive threshold, inverted output, 11x11 neighbourhood, constant 1
        thresh = cv2.adaptiveThreshold(blur, 255, cv2.ADAPTIVE_THRESH_GAUSSIAN_C,
                                       cv2.THRESH_BINARY_INV, 11, 1)
        blur_thresh = cv2.GaussianBlur(thresh, (5, 5), 5)
        return blur_thresh

    # img1 and img2 are two registered card images of the same size
    diff = cv2.absdiff(preprocess(img1), preprocess(img2))
    diff = cv2.GaussianBlur(diff, (5, 5), 5)
    flag, diff = cv2.threshold(diff, 200, 255, cv2.THRESH_BINARY)
    print(np.sum(diff))

The steps in the above lines of code are: First transform the image to a grayscale colorspace and then blur it to get rid of artifacts that arise from lighting and camera noise. Then, perform an adaptive thresholding -- i.e. highlight differences in black / white, which articulates the symbols and inscriptions in the image. To make up for slight differences in alignment, we blur again both before and after taking the absolute difference of the two images. Finally, we threshold the result and sum the intensity of the pixels that still differ.

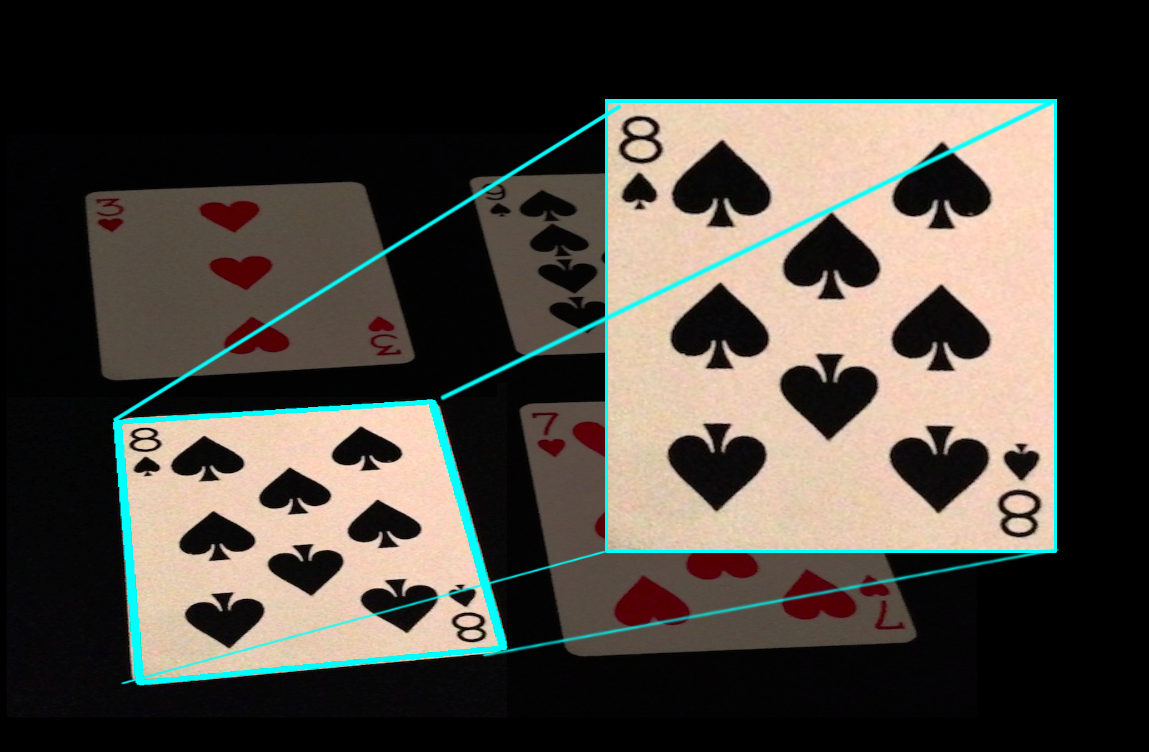

Here is the 8 of spades being compared against the Ace of Hearts. The right third of the image is the result. As you can see, the subtraction leads to a lot of artifacts.

In contrast, here's what happens when we compare the registered picture of 8 of spades from our perspective image above, against the picture of 8 of spades from the training deck. As you can see, the result is almost fully black, which means the difference is minimal!

Thus recognizing each card becomes a simple process of comparing each incoming card against every card in the training deck, and going with the one with the minimum difference. As noted before, there are many more complex and robust approaches (including ones that involve the use of classifiers). However, since this is a fun project and I only had a few hours to write code, we're going to go with the version that takes the least amount of time!
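Stripped of the image processing, the matching step is just a minimum over a list. The sketch below shows that idea on tiny made-up "images" stored as nested lists. `pixel_difference` and `closest_card` are hypothetical names, and a plain sum of absolute differences stands in for the blurred, thresholded difference described earlier.

```python
def pixel_difference(a, b):
    """Sum of absolute per-pixel differences between two equal-sized images."""
    return sum(abs(p - q)
               for row_a, row_b in zip(a, b)
               for p, q in zip(row_a, row_b))

def closest_card(candidate, training):
    """Return the (label, image) training entry that differs least from candidate."""
    return min(training, key=lambda entry: pixel_difference(candidate, entry[1]))

# Toy 2x2 "images" standing in for registered, preprocessed cards
training = [
    (("8", "S"), [[0, 255], [255, 0]]),
    (("A", "H"), [[255, 255], [0, 0]]),
]
candidate = [[0, 250], [255, 10]]

label, _ = closest_card(candidate, training)
print(label)
```

A linear scan like this is fine for a deck of 50-odd cards; the cost per frame is one difference computation per training card.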

One thing to note is that the card identification process could be reused for any card game -- not just this one.

Putting it Together

Given all the parts of the system, we can now grab pictures from the webcam and identify the cards in each picture. We then compute the solution for the numbers and display it back on the table using the projector, which is connected to the computer.

    training = img.get_training(training_labels_filename, training_image_filename, num_training_cards, avoid_cards)
    webcam = cv2.VideoCapture(0)
    cv2.namedWindow("preview")

    if webcam.isOpened():  # try to get the first frame
        rval, im = webcam.read()
    else:
        rval = False

    while rval:
        rval, im = webcam.read()
        try:
            cards = [img.find_closest_card(training, c)[0] for c in img.getCards(im, num_cards)]
            cards = ['1' if c == 'A' else c for c in cards]  # treat the ace as 1
            display(g24.solve(cards))
        except Exception:
            # Assumption: the original handler is not shown; here we simply skip frames where recognition fails
            continue

And there you have it, an end-to-end, automated player for the game 24! If you would like to try running the code yourself, the card recognition code, training deck image and test images are available on GitHub.